Making a digital face / Sefki Ibrahim

This is a breakdown of my latest project, ‘Making a Digital Face’, in which the goal was to create a photorealistic 3D bust of a female hero character. To get photorealistic results, you have to rely on photographic reference.

I chose the actress Gemma Arterton as my main reference point, along with pictures of other female celebrities, portraits and hairstyles to guide me through the process.

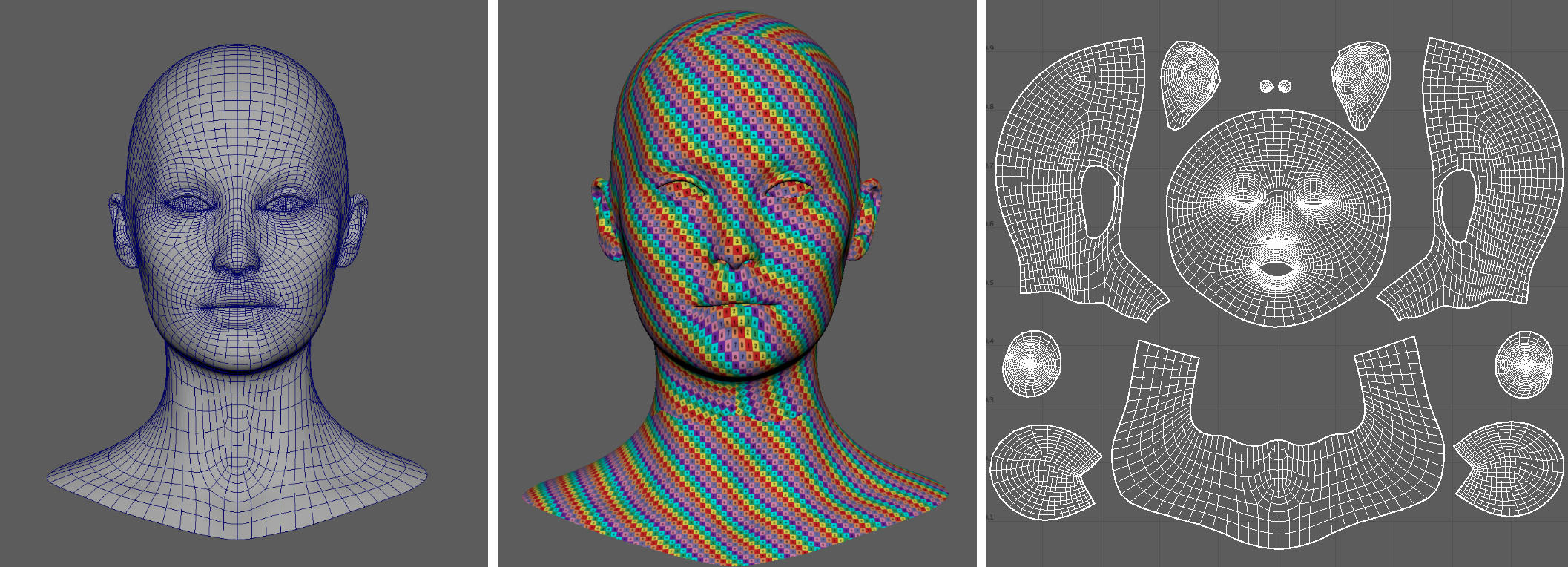

1. MODELING

The base mesh was modeled in Maya; I made sure to include topology suited to sculpting, with clear edge loops around the main features of the face. This base was then imported into ZBrush for sculpting. A solid understanding of facial anatomy is essential when sculpting a realistic face, and I had been building my understanding of the forms of the face before this project through various digitally painted studies. The head was sculpted in ZBrush by building up the planes of the face, using John Asaro’s heads as a reference. These sculptures break the head down into simple planes, which I find is a great way to block out the head initially. Once I was content with the sculpt, the model was taken back into Maya to be UV-mapped. I cut the model into a few shells (head, ears, neck, eyes and mouth cavity) and unfolded them to produce clean, undistorted UVs.

2. TEXTURING

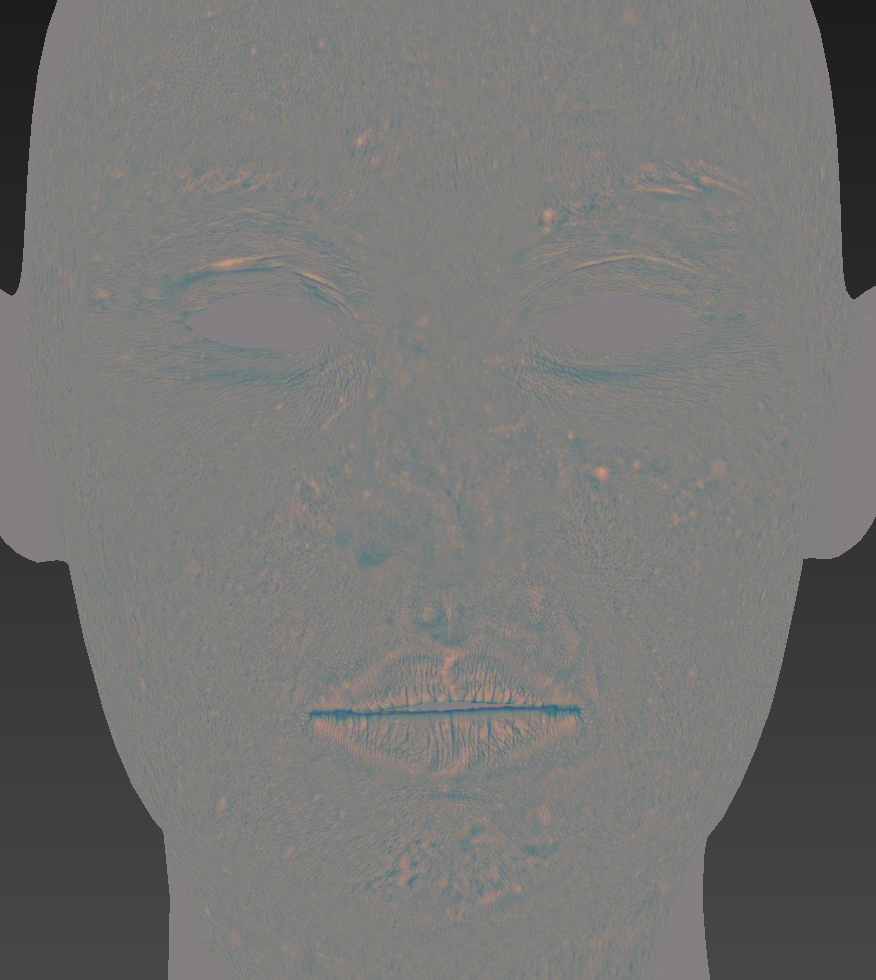

The texturing process is an important stage in a photorealistic workflow, since low-quality or badly painted textures can push the result into the ‘uncanny valley’. Hence, the most effective textures for photorealism come from photographs.

DISPLACEMENT MAP

Texturing XYZ’s ‘20s Female’ scanned data pack was projected onto the mesh in Mudbox.

First, I converted each map to RGB and organized the scans in Photoshop, pasting the secondary, tertiary and micro detail maps into the red, green and blue channels respectively. This results in the map shown below.

This map was exported at 8K resolution and projected in Mudbox (using the projection brush) onto the correct areas of the face.
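The channel packing step described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the Photoshop workflow itself: the function names and the tiny 2x2 sample maps are made up, and each map is assumed to be a same-sized grid of 8-bit grayscale values.

```python
def pack_rgb(secondary, tertiary, micro):
    """Pack three same-sized grayscale maps into one map of (R, G, B) pixels.

    Each input is a list of rows; each row is a list of ints in 0-255.
    Secondary detail goes to red, tertiary to green, micro to blue.
    """
    height = len(secondary)
    width = len(secondary[0]) if height else 0
    for name, gray in (("secondary", secondary), ("tertiary", tertiary), ("micro", micro)):
        if len(gray) != height or any(len(row) != width for row in gray):
            raise ValueError(f"{name} map does not match the expected {width}x{height} size")
    return [
        [(secondary[y][x], tertiary[y][x], micro[y][x]) for x in range(width)]
        for y in range(height)
    ]


def unpack_channel(packed, index):
    """Read one detail level back out of a packed map (0 = R, 1 = G, 2 = B)."""
    return [[pixel[index] for pixel in row] for row in packed]


# Hypothetical 2x2 stand-ins for the real scan data.
secondary = [[10, 20], [30, 40]]
tertiary = [[50, 60], [70, 80]]
micro = [[90, 100], [110, 120]]

packed = pack_rgb(secondary, tertiary, micro)
print(packed[0][0])               # (10, 50, 90)
print(unpack_channel(packed, 2))  # [[90, 100], [110, 120]]
```

Packing the three detail levels into one RGB image means a single projection carries all of them, and each level can still be read back from its own channel afterwards.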

I then exported the map at 4K resolution using the settings shown below. The base mesh was exported along with it.

DIFFUSE MAP

Generating the diffuse map followed the same process as the displacement map. In this instance, I used Texturing XYZ’s ‘Female Face 20s #35’ cross-polarized photo set to project onto the mesh in Mudbox. I made sure to use as many angles as possible when projecting to ensure the projection was clean and free of shadows.

The diffuse layer was then exported directly from Mudbox to Mari to apply some of Mari’s procedural layers to the skin. The artist Beat Reichenbach gives a great explanation online of how to enhance the skin using procedural layers by adding veins, freckles, noise and color variation.

The color zones layer was inspired by a Gurney Journey blog post on the color zones of the face, which discusses the face’s three colored undertones. These zones comprise a yellow tint on the forehead, redness on the nose and cheeks, and a blue/green tinge around the mouth and chin. Since these color zones are subtler in women than in men, the layer was reduced to a low opacity, but it still gave the skin a different feel.

CREATING ADDITIONAL MAPS

Additional maps created for the skin involved layering cavity and ambient occlusion maps (exported from ZBrush) and manual paint-overs in Mari.

The specular map (left) is particularly important because, unlike the displacement and diffuse maps, its effect does not fade with distance, so it maintains realism at all times. The shinier areas of the face were made whiter on the texture map: the forehead, nose, eyebags, lips, chin and cheeks were highlighted. Some features were made lighter than others because they are more specular (the nose is shinier than the chin). It’s important that the specular map provides an overall shininess to the face. The gloss map (right) is more specific, as it represents the oiliness of the face, so the nose, eyebags and forehead were accentuated here, along with the bottom lip.

A further map was created to control how much subsurface scattering occurs when the face reacts to light. Subsurface scattering is, in my opinion, a defining factor for photorealism, but it can easily be overdone, causing the skin to look waxy. Bony areas of the face scatter less light, so they are painted darker, whereas areas of skin covering cartilage (septum, ears, etc.) are painted lighter.

3. LOOKDEV

Once all the maps were created, the model was imported into Maya and a ‘mesh smooth’ was applied. The subdivision type was set to ‘catclark’ and its iterations were set to 2 (for now). Arvid Schneider provides in-depth shading tutorials online, which helped me bring the character to life in Arnold.

After the character was assigned an ‘aiStandardSurface’ material, the diffuse map was plugged into the ‘subsurface colour’, and the specular and gloss maps were plugged into the specular weight and coat weight, respectively. From here, the roughness and IOR values were adjusted to give the desired result (the IOR value for skin, 1.4, comes as a preset in Arnold’s standard shader material).

I stayed as faithful as I could to human skin by comparing against my reference images throughout. It’s easy to fall into the trap of producing something you think looks real, but to get photorealistic results you have to study photographic reference. The final skin shader was fine-tuned right to the very end; the process was completely iterative, and I went back and forth between Photoshop, Mudbox, Mari and Maya to tweak the maps until I got the results I wanted.
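The shader wiring described above can be summarized as a small connection plan. The sketch below is plain Python that only builds the list of connections and preset values; it does not talk to Maya. The node names (`diffuse_file`, `skin_mtl`, and so on) are hypothetical, and the attribute names are an assumption about how Maya exposes the ‘aiStandardSurface’ parameters, so check them against your own scene before relying on them.

```python
SKIN_IOR = 1.4  # the skin IOR value quoted in the text


def skin_shader_plan(diffuse_tex, specular_tex, gloss_tex, shader="skin_mtl"):
    """Return (connections, values) describing the skin shader setup.

    connections: list of (source_plug, destination_plug) pairs.
    values: dict of plug -> constant value.
    Attribute names are assumed, not taken from the original project files.
    """
    connections = [
        # Diffuse map drives the subsurface colour.
        (f"{diffuse_tex}.outColor", f"{shader}.subsurfaceColor"),
        # Specular map drives the specular weight (a scalar, so a single channel is used).
        (f"{specular_tex}.outAlpha", f"{shader}.specular"),
        # Gloss map drives the coat weight.
        (f"{gloss_tex}.outAlpha", f"{shader}.coat"),
    ]
    values = {f"{shader}.specularIOR": SKIN_IOR}
    return connections, values


connections, values = skin_shader_plan("diffuse_file", "spec_file", "gloss_file")
for source, destination in connections:
    print(f"{source} -> {destination}")
print(values)
```

Inside Maya, each pair could be passed to `maya.cmds.connectAttr` and each value to `maya.cmds.setAttr`. Keeping the plan as data makes it easy to review the wiring before touching the scene.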

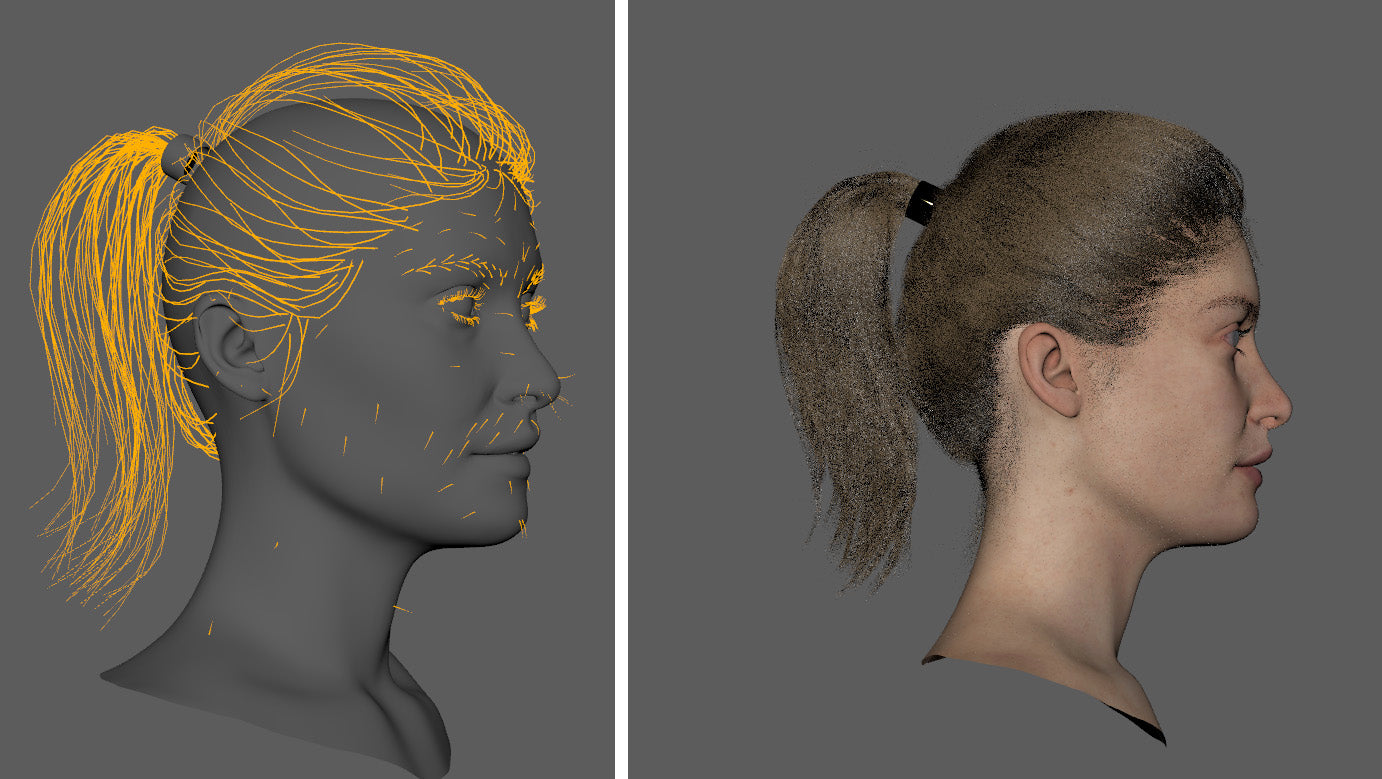

4. XGEN

EYEBROWS & EYELASHES

The first part of the XGen process was to create the eyebrows and eyelashes. I used the ‘place and shape guides’ feature within XGen. These guides are pieces of geometry that can be moved and deformed easily. When activated, the XGen hairs are interpolated according to the guides. Modifiers can then be added to introduce noise into the hair, clump the hairs, or cut them so they have an uneven appearance. The guides for the eyebrows (shown below) demonstrate the direction that the eyebrows follow. As for the eyelashes, the upper lashes are denser than the lower lashes; a strong noise modifier was added to the lashes so they could overlap and feel natural.

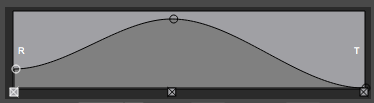

Hair naturally tapers, so it’s important to capture this using the width ramp; a lack of tapering is a big part of why hair can feel uncanny in 3D portraits. The width of nearly every groom created in this portrait used a width ramp similar to the one below.

Density maps were painted for every description so I could control exactly where I wanted the hairs to grow from (black removes hair and white gives the maximum density). Before creating the description, I would duplicate the head geometry and extract a section for the groom. For example, with the eyebrow groom, I extracted the eyebrow region of the mesh and discarded the rest. I then unfolded its UVs to increase its resolution. Painting density maps relies on UVs, so doing this ensures that you can paint on the mesh cleanly and easily.

MAIN HAIR

The hair was built by layering descriptions, from the base to the fine strands of the hairline. I had to study many images to capture the correct hair flow. Creating the main head of hair was definitely the most challenging aspect of this portrait, mainly because the ponytail hairstyle pulls the hair back and exposes the hairline. Accurately capturing the natural progression of the hair growing from the scalp is a tough feat, and getting it wrong can make the portrait look uncanny. I made sure to create individual descriptions for the hairline so I could have control over its appearance.

As with the eyebrows and eyelashes, the hair tapers at the start and at the end, which helps create that natural hair growth. As before, clump and noise modifiers were used to break up its uniformity.

A technique I used to better blend the eyebrows and the hairline was to create an interactive groom. I made the width and length very low and posed the hairs so that they flowed in the correct direction along the forehead. I increased the width of the splines as they approached the hair/eyebrow groom, which created the gradation that is seen in real hair.
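As a rough illustration of what a width ramp does, the sketch below evaluates a piecewise-linear ramp along a strand, thin at the root, full width through the middle, and tapering to nothing at the tip. The control points and the base width are assumed values chosen for the example; they are not the ramp used in this portrait, and the functions are plain Python rather than anything from XGen.

```python
def ramp_value(points, t):
    """Linearly interpolate a ramp given as sorted (position, value) pairs.

    t is the position along the strand: 0.0 at the root, 1.0 at the tip.
    """
    t = min(max(t, 0.0), 1.0)
    if t <= points[0][0]:
        return points[0][1]
    for (p0, v0), (p1, v1) in zip(points, points[1:]):
        if t <= p1:
            if p1 == p0:
                return v1
            return v0 + (v1 - v0) * (t - p0) / (p1 - p0)
    return points[-1][1]


# Assumed ramp: slightly thin root, full width mid-strand, zero width at the tip.
TAPER_RAMP = [(0.0, 0.4), (0.2, 1.0), (0.7, 1.0), (1.0, 0.0)]
BASE_WIDTH = 0.01  # hypothetical strand width in scene units


def strand_widths(samples, ramp=TAPER_RAMP, base_width=BASE_WIDTH):
    """Width at evenly spaced points from root to tip (samples must be >= 2)."""
    if samples < 2:
        raise ValueError("need at least two samples to span root to tip")
    return [base_width * ramp_value(ramp, i / (samples - 1)) for i in range(samples)]


for position, width in zip((0.0, 0.25, 0.5, 0.75, 1.0), strand_widths(5)):
    print(f"t={position:.2f}  width={width:.4f}")
```

A strand with a constant width would be the same ramp with every value set to 1.0, which is the untapered look the text warns about.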

With minimal XGen/groom experience prior to this project, I learned a lot from this process and hope to do a better job of creating realistic hair for my next project.

5. RENDERING

The scene was lit using a 3-point light setup (plus a subtle sky dome light). I wanted some shadow to be cast in the portrait because it highlights the contours of the face and makes the portrait appear less flat. Once the setup was established, the skin and hair shaders were adjusted and finalized.

For the final renders, a higher subdivision of the model was used with its subdivision type set to ‘catclark’ and iteration value set to 4. This allows the model to displace more detail, though it will increase render time. My render settings were as follows; these values were tried and tested and eventually settled upon.
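To put the cost of the higher subdivision setting in rough numbers: each Catmull-Clark iteration splits every quad into four, so the face count grows by a factor of four per iteration. The sketch below assumes an all-quad mesh and a hypothetical base count of 10,000 faces; the figure is not taken from the actual model.

```python
def subdivided_quads(base_quads, iterations):
    """Quad count after Catmull-Clark subdivision of an all-quad mesh."""
    if iterations < 0:
        raise ValueError("iterations must be non-negative")
    return base_quads * 4 ** iterations


BASE_QUADS = 10_000  # hypothetical base mesh size

lookdev_faces = subdivided_quads(BASE_QUADS, 2)  # iterations used during lookdev
final_faces = subdivided_quads(BASE_QUADS, 4)    # iterations used for final renders

print(lookdev_faces)                 # 160000
print(final_faces)                   # 2560000
print(final_faces // lookdev_faces)  # 16
```

Going from 2 to 4 iterations therefore gives the displacement map 16 times as many faces to work with, which is where both the extra detail and the extra render time come from.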

I am fascinated by the great challenge of creating believable digital humans and crossing the dreaded ‘uncanny valley’. I intend to study further and share more digital humans with you in the future!

I hope you enjoyed the process of ‘Making a Digital Face’. Feel free to follow my work: https://www.artstation.com/sefki_i

We would like to thank Sefki for his helpful contribution. If you're also interested in being featured here on a topic that might interest the community, feel free to contact us!