Hello, I'm Braulio FG, also known as Brav FG. I'm a 3D artist from Costa Rica, specializing in characters for real-time rendering and motion capture. I started this journey by studying digital animation while working in motion graphics.

Later I decided to continue just with 3D and focus on characters, learning on my own.

Some of the projects I have worked on recently include IpSoft’s digital human “Amelia”, a cinematic trailer for an unannounced project developed by Polish studio RealTime Warriors, and several real-time characters for a virtual exhibition fight developed by Hyperreality Inc.

Right now I’m the lead character artist at ROTU Entertainment for their upcoming video game “Rhythm of the Universe”. Besides that, I’m also directing a real-time cinematic based on the ancient folklore of my country, which involves motion capture and 3D scanning. I also have some personal projects and tests that I share on social media.

What is your personal approach to digital arts, and how are you connected to texturing?

My approach to 3D art often involves movement: I like to picture the characters in small animations and add some life to them. But that also means more steps in the process, because it’s not just sculpting and rendering, but also all the rigging for motion capture, plus the camera and video testing that needs to be done.

For me, out of all those steps, texturing is one of the processes that I enjoy the most, because I’m in control and free to add and fine-tune details that give each character unique characteristics.

How did you first learn about TexturingXYZ? Did you have any specific reaction to it?

I first learned about TexturingXYZ materials from some making-of videos and tutorials I saw online. Before knowing about these resources, I was doing the traditional stencil projection and sculpting details by hand.

My initial reaction was surprise, along with a bit of confusion, because I didn’t know how to fit them into my workflow. But the site provides very insightful step-by-step guides that help you achieve a result more quickly. Since then I haven’t looked back, but I still like to add details by hand to customize the final result.

What is the place of TexturingXYZ maps in your usual workflows? Which software do you use? Any tips for aspiring or professional Artists?

The maps from TexturingXYZ are now an important part of my workflow because they enhance the result very quickly. I use MARI to create all my skin-related textures. The reason I use it over other projection methods is that, for me, MARI is like Photoshop, and I’m used to that workflow now. That is how I’m able to deliver fast results, and it also handles larger texture sizes very well.

In my opinion, TexturingXYZ’s materials do the job fast and are really easy to apply. But I recommend taking the time to customize the result and clean up the stretching marks and UV seams.

Can you explain your workflow when using motion capture and real-time rendering?

For real-time rendering, I use Unreal Engine 4. Along with Maya, I try different motion capture solutions, depending on what my goal is. These days we have access to some of this technology at a low price, which gives us a broad range of solutions to integrate.

For example, you can use Microsoft Kinect for body and facial animation, the PS3 Eye cameras for full-body recording, the Leap Motion for hand animation, or even an iPhone X for facial motion capture.

For body motion capture, you record the movement of the actor, and the software you are using translates that movement to a skeleton. Then you export it as an FBX file and use it to drive the movement of your character’s rig.

That process is called retargeting, and in Maya I use the HumanIK feature for this step. After that, you can use animation layers if the movement needs some fine-tuning. You can also go live inside UE4 with its recently added plugin.
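As a rough illustration of the retargeting idea, here is a minimal Python sketch that copies per-frame rotations from a mocap skeleton onto a character rig through a joint-name mapping. It is not how HumanIK works internally: a real retargeter also compensates for different proportions and rest poses. All joint names and the `retarget` helper are hypothetical, chosen only for this example.

```python
# Hypothetical joint names: real mocap skeletons and rigs use their own naming.
JOINT_MAP = {
    "Hips": "root_jnt",
    "Spine": "spine_01_jnt",
    "LeftArm": "l_upperarm_jnt",
    "RightArm": "r_upperarm_jnt",
}


def retarget(source_anim, joint_map):
    """Copy per-frame rotations from mocap joints onto the mapped rig joints.

    source_anim maps a source joint name to a list of (rx, ry, rz) Euler
    rotations in degrees, one tuple per frame. Source joints that have no
    entry in joint_map are skipped.
    """
    target_anim = {}
    for source_joint, frames in source_anim.items():
        target_joint = joint_map.get(source_joint)
        if target_joint is None:
            continue  # no matching joint on the character rig
        target_anim[target_joint] = list(frames)
    return target_anim


# Two frames of made-up mocap data, including a joint the rig does not have.
mocap = {
    "Hips": [(0.0, 0.0, 0.0), (0.0, 5.0, 0.0)],
    "LeftArm": [(10.0, 0.0, 45.0), (12.0, 0.0, 50.0)],
    "Prop": [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
}

rig_anim = retarget(mocap, JOINT_MAP)
for joint, frames in rig_anim.items():
    print(joint, frames)
```

The explicit mapping table is the part that carries over to real tools: the mocap skeleton and the character rig rarely share joint names, so some form of joint-to-joint definition has to exist before any motion can be transferred.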

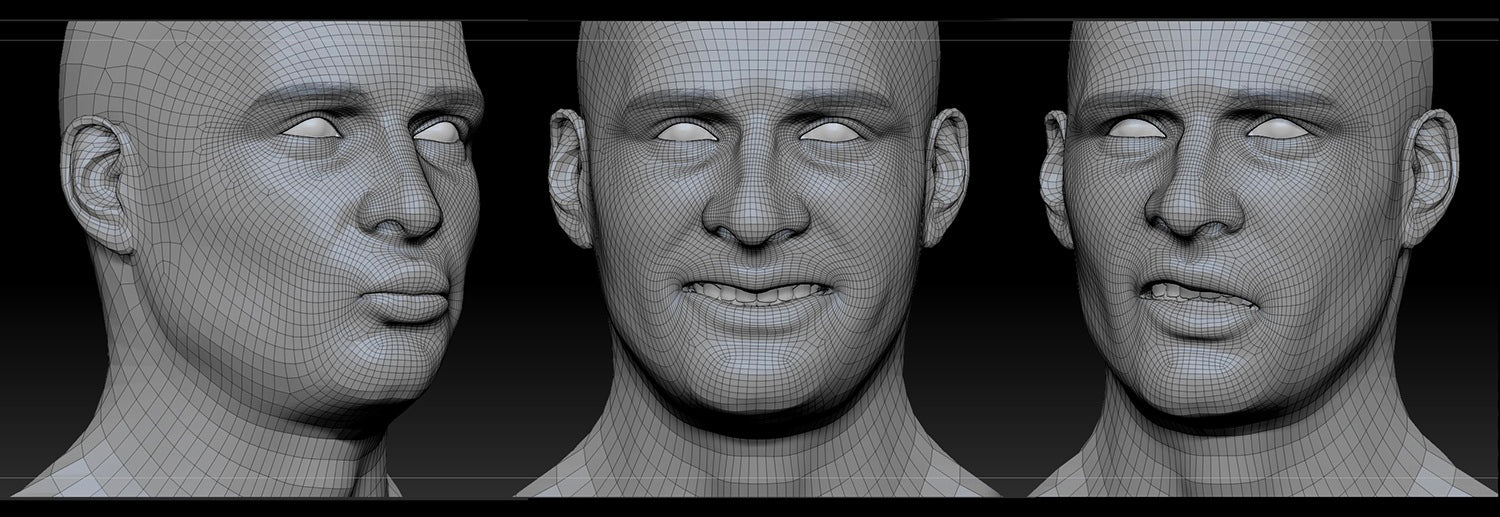

For the face, it’s a little bit different, since you need blendshapes and also joints to create the expressions and rotate the eyes, head, jaw, etc. Facial mocap also needs retargeting; in Maya I connect the captured data to the facial rig controls using constraints. For live facial motion capture inside UE4, you can use Blueprints to do the retargeting.

In your opinion, what are the differences between texturing for non-animatable characters and texturing for motion capture?

When texturing a character, you probably only think about the most commonly used maps, such as normal, color, roughness or specular.

But when you are texturing for motion capture or animation, especially on the face, you need to create some extra maps. You can add wrinkle maps, used to achieve a more convincing result when the skin folds in certain expressions. There are also compression maps, which show how blood flow changes and skin compresses in some poses.

On top of that, you can add extra fixing maps for specific areas of the face. A quick example is the eyelids: when the character closes the eyes, the projected texture usually stretches, so you can have an extra texture to fix that.

All these textures are driven by masks and triggered by blendshapes. Usually, these types of maps are generated from scan data or photos, but some can be made manually.
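The idea of a texture that is driven by a mask and triggered by a blendshape can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: each map is a flat list of single-channel values between 0 and 1, and the blend is a plain linear interpolation. Real shaders work per pixel on full color or normal maps, and the `blend_wrinkle` function is a hypothetical name, not an API from any engine.

```python
def blend_wrinkle(base, wrinkle, mask, weight):
    """Blend a wrinkle map over a base map.

    base, wrinkle and mask are equally sized lists of values in [0, 1].
    mask limits the effect to one facial region; weight is the current
    blendshape weight (0 = neutral face, 1 = full expression).
    """
    weight = max(0.0, min(1.0, weight))  # clamp the blendshape weight
    return [b + (w - b) * m * weight for b, w, m in zip(base, wrinkle, mask)]


# Four texels: the mask covers the first fully, the second by half,
# and leaves the last two untouched.
base = [0.2, 0.2, 0.2, 0.2]
wrinkle = [0.8, 0.8, 0.8, 0.8]
mask = [1.0, 0.5, 0.0, 0.0]

relaxed = blend_wrinkle(base, wrinkle, mask, 0.0)  # blendshape off
raised = blend_wrinkle(base, wrinkle, mask, 1.0)   # blendshape fully on

print(relaxed)
print(raised)
```

With the blendshape at zero the base map is returned unchanged, and as the weight rises the wrinkle detail fades in only where the mask allows it. The same pattern would apply to compression maps or eyelid fix maps, each with its own mask and driving blendshape.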

Do you think real-time rendering limits texturing?

One of the downsides of real-time rendering, compared to a more traditional rendering approach, is the texture size and polygon count it is able to handle, especially for video games.

The most commonly used texture sizes are 2K or 4K, and I’ve even worked with 8K maps for real-time rendering. But it’s important to remember that a larger texture is not always the solution. It’s also very important to plan how you lay out your UVs to achieve a good result.

So, for now, GPUs can only handle textures up to a certain size. But this has been improving steadily over the past years, so I believe that limit can be overcome soon.
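To give a sense of why texture size matters to the GPU, the short Python sketch below estimates the memory footprint of a single square texture. It assumes uncompressed RGBA data at 4 bytes per texel, which is an assumption made for the arithmetic only: game engines normally use compressed formats that are considerably smaller. The `texture_bytes` helper is a hypothetical name.

```python
def texture_bytes(size, bytes_per_texel=4, mipmaps=False):
    """Estimate the memory used by one square texture.

    size is the edge length in texels (2048 for a 2K map). The default of
    4 bytes per texel assumes uncompressed 8-bit RGBA. With mipmaps=True
    the full chain down to 1x1 is included.
    """
    total = 0
    while True:
        total += size * size * bytes_per_texel
        if not mipmaps or size == 1:
            break
        size //= 2  # each mip level halves the edge length
    return total


MIB = 1024 * 1024
for label, size in (("2K", 2048), ("4K", 4096), ("8K", 8192)):
    plain = texture_bytes(size) / MIB
    with_mips = texture_bytes(size, mipmaps=True) / MIB
    print(f"{label}: {plain:.0f} MiB, {with_mips:.1f} MiB with mipmaps")
```

Under these assumptions a 2K map takes 16 MiB, a 4K map 64 MiB and an 8K map 256 MiB before mipmaps, so each step up in size costs four times the memory. A character usually carries several maps, which is why a careful UV layout can be a better investment than simply doubling the resolution.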

In your experience, does using 3D scanned models for production eliminate the need for texturing on characters?

Photogrammetry or 3D scanning is used mostly to save time, and saving time equals saving money. This method helps you capture the likeness and expressions faster, as well as the subtle details of the face. So it solves a lot of steps at once, but the result depends on whether the artist knows how to work with 3D scans. By themselves, scans are not amazing: most of them come damaged and need cleanup, retopology, UV mapping, texture transfer, etc.

So it’s a very important step in production, but not the only one. Some more traditional or classical methods still need to be applied. I don’t rely only on the textures captured by the scans; I try to enhance the result with extra texture projections and cleanup.

On your artistic journey, from the start until today, did you find other artists who inspired you, or who still give you the will to keep looking over the horizon?

I have always admired artists, not only in the digital world but also in traditional fields like sculpture, painting and even music. I find inspiration in other character artists, but also in other topics like cinematography and image composition. Like I said, I’m always trying to think of art in motion.

That’s why right now I’m looking up to the work done by Neill Blomkamp and Oats Studios. The work of Erasmus Brosdau is also really inspiring, because they are using real-time rendering to tell stories in short cinematic chapters. For production, they use applied technology such as 3D scanning and motion capture, both for body and facial animation. It’s a very similar approach to what I like to do. So in the end, I take a little bit from each area to find inspiration and try to improve all the time.

Are there still challenges you face in your art today?

Art in all its forms is a never-ending challenge, no matter whether you are starting out or have a bit more experience. You will always have something new to learn or improve. Right now, one of the challenges I’m facing is the amount of time it takes to complete some steps that I’m not that familiar with.

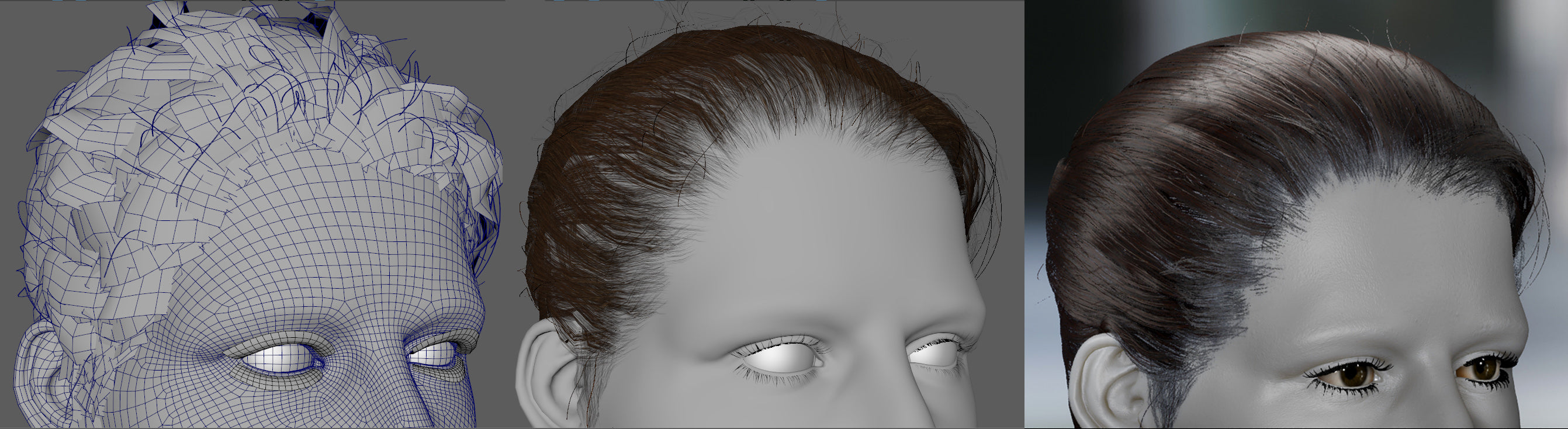

One example of that would be hair creation. This process consumes a lot of time because hair planes need to be placed manually and there’s a lot of fine-tuning to be done. So I definitely have the goal of improving in this area.

How do you picture the industry in 5 years? Do you feel any tendencies or boundaries that could exist?

Right now I see a tendency towards real-time rendering. The graphics cards released each year are getting better and better. They are able to handle not only higher polygon counts and more texture resolution, but also real-time reflections and shadows. So even though we are not quite there yet, maybe soon real-time rendering could be used not just for video games.

In the motion capture area, the next big thing to put in the hands of artists and developers is video scanning technology. It is about scanning every position of the body over a period of time. So if someone is scanned while talking, it will create 24 scans per second of video. The result is a sequence of 3D models that appear to be moving. This is already being used, so it is nothing new, but it is out of reach for low-end developers or the average 3D artist.

Soon you will be able to do this using your cellphone camera, just as you are able to do good photogrammetry right now. With video scanning, you no longer need facial motion capture, facial rigging or separate expressions. You could save a lot of time and steps, and the results are incredible.

Thanks for reading. I hope you learned something new and decide to implement motion capture or real-time rendering in your projects. If you have any more questions or want to see more of my work, you can follow me on social media.

ArtStation: https://www.artstation.com/bravfg

Facebook: https://www.facebook.com/BraVlio.FG

Instagram: https://www.instagram.com/bravfg_3dart/

We would like to thank Brav for his work and helpful contribution. If you're also interested in being featured here on a topic that might interest the community, feel free to contact us!